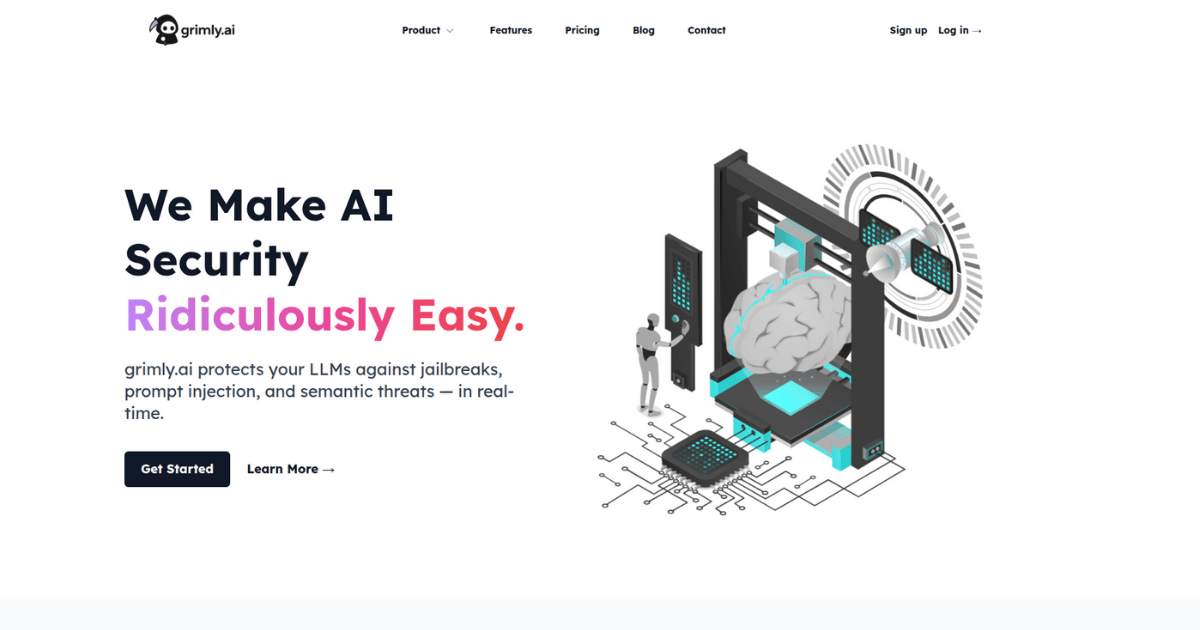

grimly.ai

grimly.ai is a LLM security platform that protects AI systems from prompt injection.

grimly.ai provides robust AI security, protecting LLMs against jailbreaks, prompt injection attacks, and semantic threats in real-time. It acts as a safety net for your LLM stack, ensuring the integrity and reliability of your AI applications. The platform offers multi-layered defense with zero guesswork.

grimly.ai offers features like semantic threat detection, prompt injection firewall, and a flexible rule engine. It provides visibility and logging of every attack attempt, making it audit-friendly. Ideal users include enterprises, agencies, and small businesses looking to secure their AI integrations and protect their AI agents.

grimly.ai offers features like semantic threat detection, prompt injection firewall, and a flexible rule engine. It provides visibility and logging of every attack attempt, making it audit-friendly. Ideal users include enterprises, agencies, and small businesses looking to secure their AI integrations and protect their AI agents.

- Detect semantic threats using embedding similarity and adversarial rewrite protection.

- Implement prompt injection firewall using fuzzy logic and character class mapping.

- Define flexible rules for blocklists, rate limits, and response overrides.

- Log every attack attempt with exact bypass method for audit trails.

- Guard system prompts to keep base instructions private from attackers.

No video tutorial available for this AI tool yet.

We're working on adding video tutorials for this tool.

- ProtectAI

- Lakera

- promptarmor